Simulating 54 qubits with Qrack – on a single GPU

As lead developer of the Qrack project, I recently flew to Bellevue, WA, to present at IEEE Quantum Week ’23, on behalf of authors on the Qrack and Unitary Fund teams, the first formal report ([arXiv:2304.14969]) of comparative benchmarks between unitaryfund/qrack and unitaryfund/pyqrack and other quantum computer simulator software. We were thrilled to have the opportunity to subject Qrack to peer review and criticism, and to introduce the open source project to a wider professional and academic community! Get started with Qrack from its documentation, or dive right into PyQrack, its simple but powerful Python API.

Qrack was founded six years ago, in 2017, by me (now a technical staff member of Unitary Fund) and Benn Bollay (CTO of Fusebit) with a relatively simple (but surprisingly overlooked) vision: create the world’s best open-source gate-model quantum computer simulator for the specific use case of running “quantum” workloads (by logical programming paradigm) as quickly and efficiently as possible on any “classical” computer. Released under the “permissive copyleft” LGPL-3.0 license, Qrack has built out support for virtually all major operating systems and processor instruction sets, to ensure that the floor of global, public, “free” access to quantum workload throughput is never lower than the Qrack team’s best efforts, shared openly.

Before I joined Unitary Fund as a technical staff member, Qrack was awarded a Unitary Fund microgrant, and the software is now affiliated with Unitary Fund. As of the publication date of this blog post, PyQrack has over 685,000 total downloads, with 4,000 to 5,000 downloads per week.

“Universally” compatible, and a “team player”

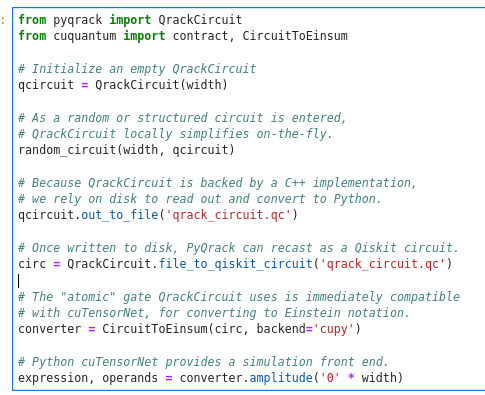

PyQrack is Qrack’s dependency-free Python ctypes wrapper, which exposes the Qrack shared library binaries directly for just-in-time (JIT) execution via a Python interpreter. (If you prefer Rust, the equivalent wrapper is Qook.) Plugins and providers are available for Qiskit, Cirq, and even the Unity video game engine, with plans to keep expanding plugin support to virtually every major open source quantum framework in the global ecosystem as they arise. Supported platforms include all available Original Equipment Manufacturer (OEM) combinations of the x86_64, x86, ARMv7, and ARM64 instruction sets with the Linux, Windows, Mac, iOS, and Android operating systems, as well as WebAssembly (Wasm). If users prefer to offload parts of their work to conventional tensor network software, PyQrack’s QrackCircuit class can aggressively simplify structured and general quantum circuits locally, then convert them to and from Quimb, TensorCircuit, or NVIDIA cuTensorNet representations, as shown in a notebook showcasing the use of Qrack with cuQuantum as a backend.

As easy as quantum gets

Qrack has been praised for its “transparent” design, which serves ease of use even when programmers know nothing of the specific simulation methods employed and “hybridized” in Qrack: in many or most cases, maximum theoretical performance is achieved simply by importing the PyQrack QrackSimulator class and instantiating it with a single constructor argument, the qubit width of the simulator instance, and no other configuration. When a user does this, Qrack automatically combines on-the-fly circuit simplification, CPU, GPU, multi-GPU, stabilizer, and original “state factorization” simulation methods as appropriate to achieve maximum throughput and minimum memory footprint. Should a user want Qrack’s alternative “quantum binary decision diagram” back end, want to excise any “layer” from the default stack, or want to deploy Qrack in true “high performance computing” (“HPC”) applications, these features are simple to control with a minimal set of QrackSimulator constructor arguments and environment variables, as shown in a PyQrack Jupyter notebook.

Performance

Performance details have been subjected to peer review in the IEEE Quantum Week ’23 conference proceedings, in a report titled “Exact and approximate simulation of large quantum circuits on a single GPU”. As the title indicates, the report focuses on single-GPU benchmarks, specifically an NVIDIA RTX 3080 Laptop GPU for exact benchmarks and an NVIDIA A100 for approximate benchmarks, though a proof of concept has already demonstrated Qrack scaling to at least 8 GPUs simultaneously.

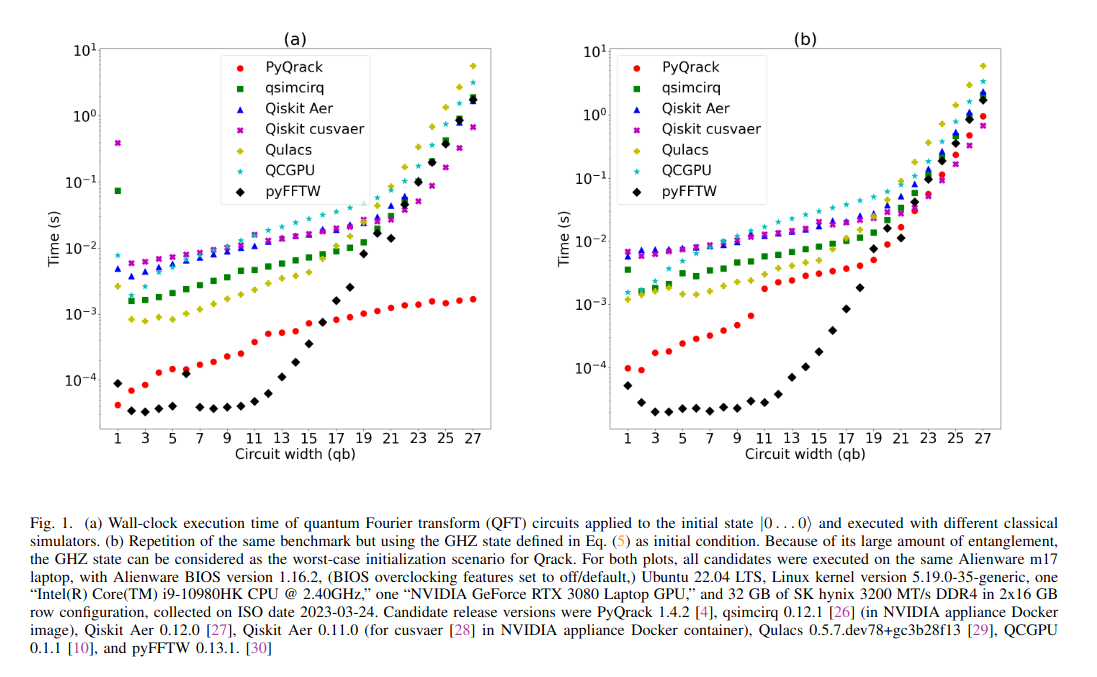

For “ideal” (as opposed to “approximate”) simulation benchmarks, the report compares a set of popular GPU-accelerated quantum computer simulators, as well as a classical discrete Fourier transform (DFT) solver, on the “quantum” (or “discrete”) Fourier transform (QFT). Qrack exhibits unique special-case performance on permutation basis eigenstates as inputs to the QFT, carrying them out in linear complexity, while we put forward a Greenberger–Horne–Zeilinger (GHZ) state input as likely Qrack’s hardest case. With GHZ input, Qrack is faster than every candidate except the classical DFT solver at low qubit widths, and at high qubit widths it outperforms every candidate except cuQuantum-based Qiskit Aer, which leads Qrack by less than a factor of 2.
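The linear-complexity special case has a textbook explanation: the QFT of a single computational basis state |x⟩ is a product state, so it can be stored as n per-qubit amplitude pairs rather than 2^n amplitudes, whereas the QFT of a GHZ input is entangled. The pure-Python sketch below checks both facts on 4 qubits. It illustrates the underlying math only and is not Qrack’s implementation; the function names and the little-endian qubit ordering (qubit k is bit k of the basis index) are assumptions made for this example.

```python
import cmath
import math


def qft_basis_factors(x, n):
    """Per-qubit (a0, a1) amplitudes of QFT|x> on n qubits (qubit k = bit k).

    QFT|x> = (1/sqrt(N)) * sum_y exp(2*pi*i*x*y/N) |y>, with N = 2**n, factors
    into qubit k being (|0> + exp(2*pi*i*x*2**k/N)|1>)/sqrt(2).
    Storing these n pairs takes O(n) memory instead of O(2**n).
    """
    N = 2 ** n
    s = 1 / math.sqrt(2)
    return [(s, s * cmath.exp(2j * math.pi * x * 2 ** k / N)) for k in range(n)]


def expand(factors):
    """Build the dense 2**n state vector from per-qubit (a0, a1) pairs."""
    n = len(factors)
    state = []
    for y in range(2 ** n):
        amp = 1 + 0j
        for k, (a0, a1) in enumerate(factors):
            amp *= a1 if (y >> k) & 1 else a0
        state.append(amp)
    return state


def dense_qft(state):
    """Textbook O(N^2) QFT applied to an arbitrary dense state vector."""
    N = len(state)
    norm = 1 / math.sqrt(N)
    return [
        norm * sum(a * cmath.exp(2j * math.pi * x * y / N) for x, a in enumerate(state))
        for y in range(N)
    ]


def is_product_across_qubit0(state, tol=1e-9):
    """True if qubit 0 is unentangled from the rest (2 x N/2 matrix has rank <= 1)."""
    u, v = state[0::2], state[1::2]
    return all(
        abs(u[i] * v[j] - u[j] * v[i]) < tol
        for i in range(len(u))
        for j in range(len(u))
    )


n = 4
N = 2 ** n

# Permutation-basis input |x>: the factored QFT matches the dense QFT.
x = 11
basis = [1.0 if i == x else 0.0 for i in range(N)]
factored = expand(qft_basis_factors(x, n))
dense = dense_qft(basis)
print(max(abs(a - b) for a, b in zip(factored, dense)) < 1e-9)  # True

# GHZ input (|0...0> + |1...1>)/sqrt(2): the QFT output is entangled.
ghz = [0.0] * N
ghz[0] = ghz[N - 1] = 1 / math.sqrt(2)
print(is_product_across_qubit0(dense_qft(ghz)))  # False
```

The rank check only tests one bipartition (qubit 0 versus the rest), which is enough to show that the GHZ output is not a full product state.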

It is worth remembering, when weighing this very modest performance advantage of (cuStateVec-based) “cusvaer” in a relatively specific case, that the PyQrack wheels for most operating systems download from PyPI in about 4 to 5 MB and have no dependencies at all (besides packaging, which a PEP has technically made part of the Python language standard); cusvaer is currently available only packaged in the cuQuantum Appliance (a Docker container) with GBs of dependencies.

We show that Qrack can simulate a quantum circuit with 54 qubits on a single GPU at fidelities approaching the state of the art of quantum supremacy experiments. As reported in the article, an attainable average fidelity of about 4% on 7 layers of depth of a 54-qubit “nearest-neighbor” coupler circuit might seem modest, but it is achieved with less than 80 GB of total memory footprint, on a single GPU. By employing a virtualization framework to connect nodes, it should already be possible to scale Qrack to an arbitrarily high number of GPUs, increasing this fidelity figure as a function of available (GPU) memory. We are eager to explore such true “HPC” regimes. It is worth noting how far Qrack’s capabilities have come, given that it started as an unfunded hobbyist project. We do not take for granted that any user has the access and financial resources to run on 64 GPUs for over a dozen hours, for example, potentially costing tens or hundreds of thousands of US dollars. This is exactly why the Qrack developers have focused their efforts for years on hardware available to virtually any “consumer,” including first-class support for integrated graphics accelerators and CPU-only systems as low-cost alternatives to GPUs.
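For a sense of scale, the back-of-the-envelope arithmetic below shows why exact dense simulation at 54 qubits is out of reach for one GPU. It is a sketch that assumes a plain dense state vector with one complex amplitude per basis state, which is not how Qrack represents this approximate run; the constant and function names are hypothetical.

```python
# Back-of-the-envelope memory for a dense state vector (assumption: no
# compression, factorization, or approximation of any kind).
PIB = 2 ** 50          # bytes per pebibyte
COMPLEX64 = 8          # two 32-bit floats per amplitude
COMPLEX128 = 16        # two 64-bit floats per amplitude


def state_vector_bytes(n_qubits, bytes_per_amplitude):
    """Memory for one complex amplitude per computational basis state."""
    return (2 ** n_qubits) * bytes_per_amplitude


n = 54
single = state_vector_bytes(n, COMPLEX64)
double = state_vector_bytes(n, COMPLEX128)
print(single // PIB, "PiB at single precision")  # 128 PiB at single precision
print(double // PIB, "PiB at double precision")  # 256 PiB at double precision

# How many times larger the single-precision vector is than an 80 GB budget.
budget = 80 * 10 ** 9
print(f"{single / budget:.2e}")  # 1.80e+06

# Largest qubit count whose single-precision dense vector fits in 80 GB.
max_qubits = (budget // COMPLEX64).bit_length() - 1
print(max_qubits)  # 33
```

Real simulators also need working memory beyond the state vector itself, so these figures are lower bounds for a dense approach.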

At a high level, Qrack can make many of the same general performance claims as conventional tensor network approaches: for special cases of low-entanglement or “near-Clifford” simulation, it can often support hundreds or thousands of qubits in a single quantum circuit. Since Qrack robustly supports interoperability with major conventional tensor network software, it is also incredibly easy to combine Qrack’s techniques with those more popular back ends. However, we think many users would be surprised at how much functionality equivalent to tensor networks Qrack already covers through relatively “novel” simulation techniques. You can learn more about Qrack’s simulation methods in the recent report, to appear in the Proceedings of IEEE QCE 2023.
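Part of why “near-Clifford” circuits can reach hundreds or thousands of qubits is that pure Clifford circuits admit the stabilizer formalism, in which a state is tracked by n Pauli-string generators in O(n^2) bits instead of 2^n amplitudes. Below is a minimal sketch of the textbook Aaronson–Gottesman tableau update rules for H, S, and CNOT, used to prepare a 1,000-qubit GHZ state. It is not Qrack’s code: the class name is hypothetical, measurement is omitted for brevity, and unlike Qrack’s hybridized stack this sketch cannot handle any non-Clifford gates.

```python
class StabilizerTableau:
    """Minimal Aaronson-Gottesman-style tableau tracking n stabilizer generators.

    Only the Clifford gates H, S, and CNOT are supported, and measurement is
    omitted to keep the sketch short. Memory is O(n^2) bits, not O(2**n) amplitudes.
    """

    def __init__(self, n):
        self.n = n
        # Generator i starts as Z_i, which stabilizes |0...0>.
        self.x = [[0] * n for _ in range(n)]
        self.z = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
        self.r = [0] * n  # sign bit per generator: 0 -> +1, 1 -> -1

    def h(self, a):
        # Hadamard swaps X and Z on qubit a; Y picks up a sign flip.
        for i in range(self.n):
            self.r[i] ^= self.x[i][a] & self.z[i][a]
            self.x[i][a], self.z[i][a] = self.z[i][a], self.x[i][a]

    def s(self, a):
        # Phase gate maps X -> Y and Y -> -X on qubit a.
        for i in range(self.n):
            self.r[i] ^= self.x[i][a] & self.z[i][a]
            self.z[i][a] ^= self.x[i][a]

    def cnot(self, a, b):
        # Control a, target b; the sign update uses the pre-gate bits.
        for i in range(self.n):
            x, z = self.x[i], self.z[i]
            self.r[i] ^= x[a] & z[b] & (x[b] ^ z[a] ^ 1)
            x[b] ^= x[a]
            z[a] ^= z[b]

    def generator(self, i):
        """Render generator i as a signed Pauli string, qubit 0 first."""
        paulis = "".join("IXZY"[self.x[i][q] + 2 * self.z[i][q]] for q in range(self.n))
        return ("-" if self.r[i] else "+") + paulis


# Prepare a 1,000-qubit GHZ state: H on qubit 0, then a CNOT fan-out.
n = 1000
t = StabilizerTableau(n)
t.h(0)
for q in range(1, n):
    t.cnot(0, q)
print(t.generator(0) == "+" + "X" * n)  # True
print(t.generator(1)[:4])  # +ZZI
```

The resulting generators, X on every qubit plus Z_0 Z_i for each i, are exactly the stabilizers of the GHZ state, held in about two million bits rather than 2^1000 amplitudes.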

Check out the repositories on the Unitary Fund GitHub organization, star and share, and get Qrackin’! You rock!